Why is DB Migration so Hard?

Your ASP.NET Website needs a PostgreSQL DB migration project right now. Something that you can invoke separately, usually in a release pipeline; but something tells me this isn't going to be a 2-hour task. I can feel it in my gut that this is going to be hard.

Why is it going to be hard? Because I don't have experience in PostgreSQL. I just used it for this project for the *checks notes* for the dopamine. And also, SQL Server is expensive.

Also, we then still need to hook it all up to our Azure CI/CD pipeline.

So, the plan is:

- Create a dotnet console app.

- Generate the SQL scripts for the DDL commands.

- Generate the SQL scripts for seeding data.

- Configure build pipeline.

- Configure release pipeline.

Pretty straightforward? Let's hope so.

The Console Application

Straight out of DbUp's getting started page:

var upgrader =

DeployChanges.To

.PostgresqlDatabase(connectionString)

.WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly())

.LogToConsole()

.Build();

var result = upgrader.PerformUpgrade();The only difference is I am using Postgres as you can see above.

You have to install a bunch of other nuget stuff, most importantly, DbUp itself.

We are off to a good start, and I feel great.

01_Schema.sql

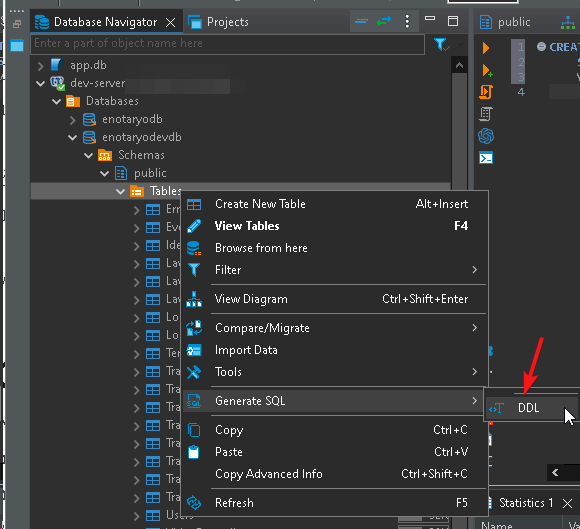

Next in our list of tasks, is to generate the initial scripts for our database. I prefixed it with 01_ so DbUp can pick it up before anything else. This contains the table schema, relationships, constraints, indexes, and all the other DB stuff your project needs. I created mine thru the UI of DBeaver (my DB IDE of choice).

As soon as I saw the generated PostgreSQL code, my heart skipped a beat. Literally - because of a medical condition I have. It has nothing to do with the code, don't worry. Yet. DBeaver did a good job of generating the initial scripts for me. I just did a very minimal tweaking, for example, the UUID generator had to be declared at the start, because apparently, batteries not included.

One important thing to note is that this needs an already existing, empty DB on the server. If it does not exist, you have to manually create it yourself.

02_SeedLookupData.sql

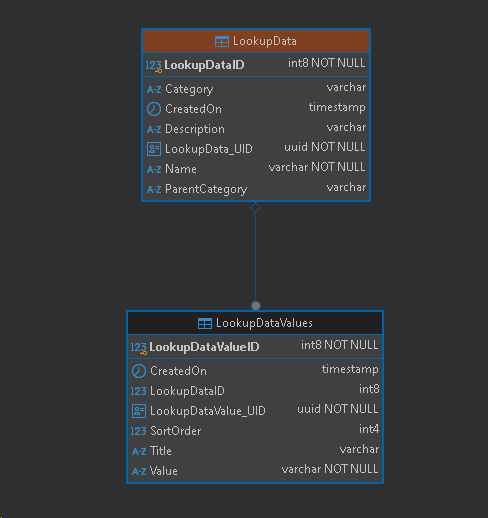

Next, I need to seed data for these tables:

I scoff. "This is easy-peasy-lemon-squeasy", I said to myself.

INSERT INTO LookupData (Name)

VALUES

('Document Types'),

('Identification Types');PostgreSQL said screw you and your case insensitivity.

INSERT INTO "LookupData" ("Name")

VALUES

('Document Types'),

('Identification Types');Much better! For the child tables, I just need to get the generated LookupDataID from each of them, store that in a variable and then Bob's my uncle, right?

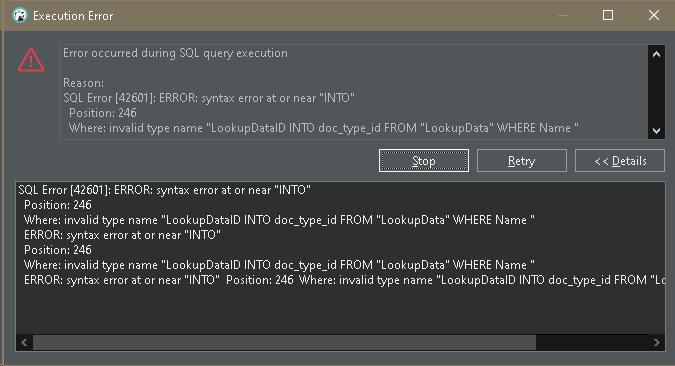

Umm:

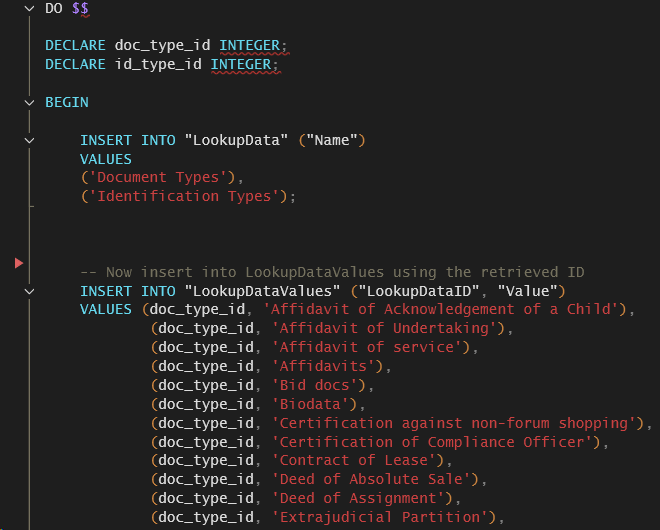

DECLARE doc_type_id INTEGER;

SELECT "LookupDataID" INTO doc_type_id FROM "LookupData" WHERE "Name" = 'Document Types' LIMIT 1;

I won't tell you how long it took me to fix this because I am too embarrassed to say.

Apparently, the variables in Postgres are spoiled little f*kcers that don't want to share space with the peasants:

You see the $$ at the top? Kids, cover your ears for a second. MOTHERF*****!!!

Yes, this post has some swearing in it, sorry.

Testing

All of our scripts are running fine in DBeaver, it should be smooth sailing from here, right? Oh, my sweet summer child, let me show you.

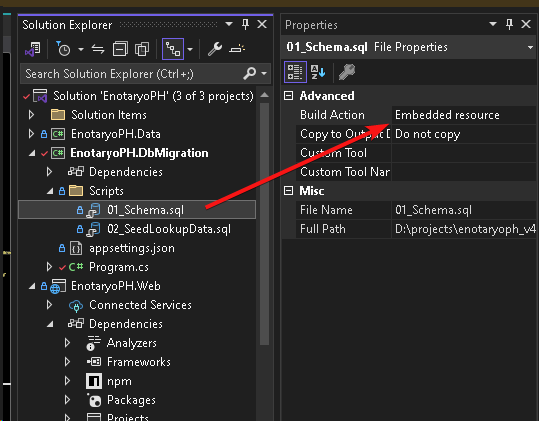

By the way, make sure that you choose 'Embedded resource' in the file properties window for all of the scripts that you will run with DbUp.

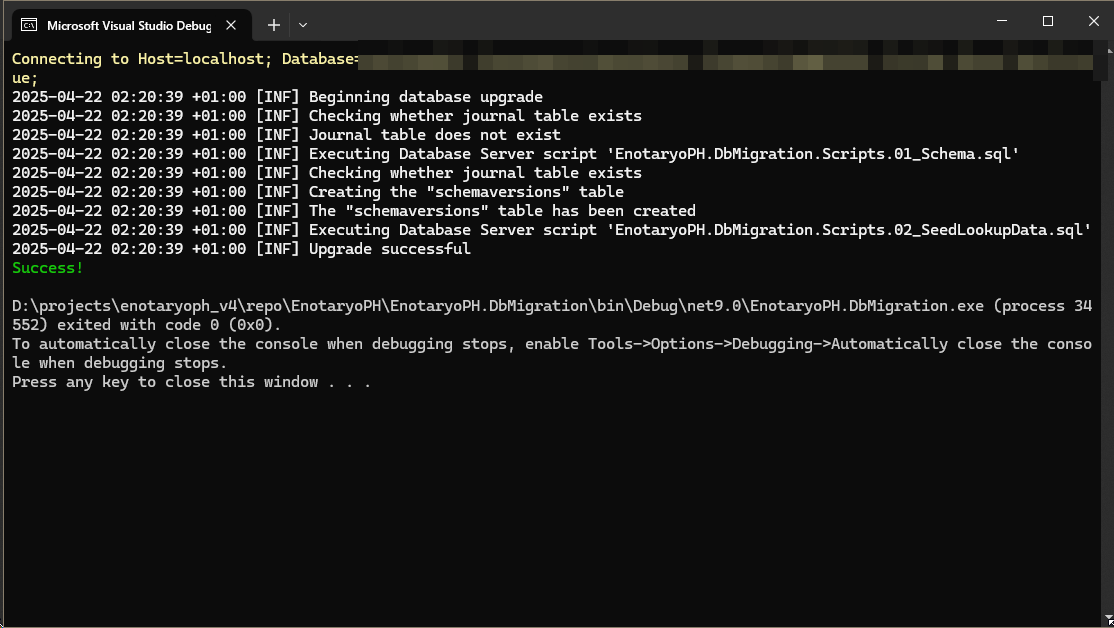

Now run the DbMigration project.

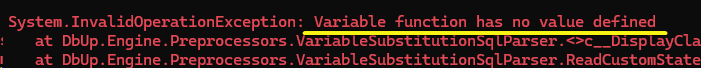

Oh my god. Why is this so f*kcing hard?

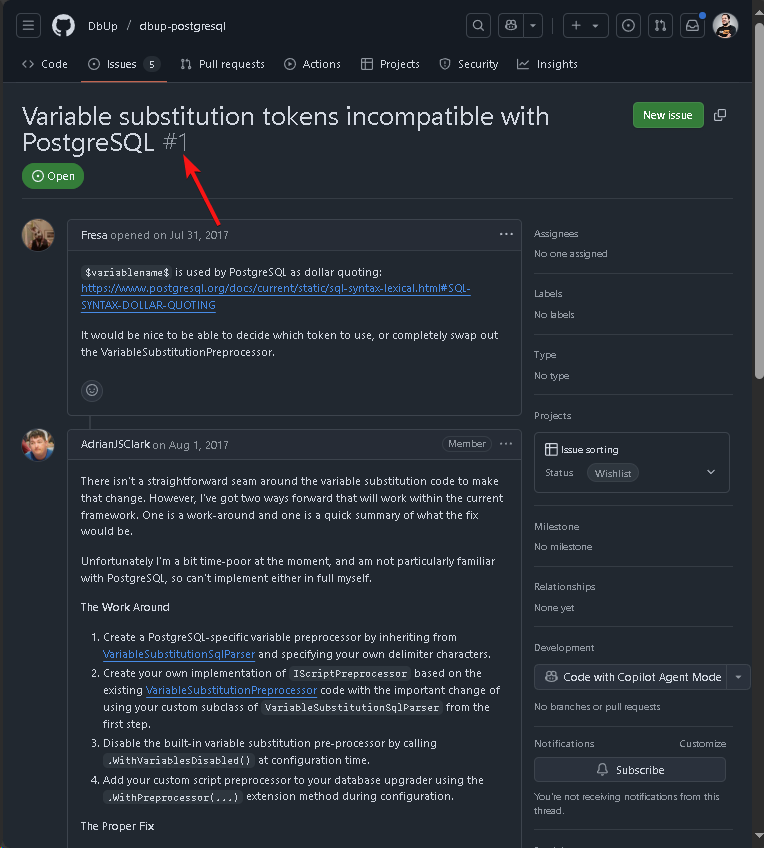

Take a look at this issue:

It's the very first ticket for the Postgres plugin since 2017 and its still open. The workaround mentioned by AdrianJSClark suggested I do steps 1, 2, 3, and 4. At which point I was seriously considering I should rewrite and use MongoDB this time.

Lucky for me, I just needed to do step 3, and it just worked.

Apply the workaround and run the console app again:

To be continued

Whoops look at the time. Next time I'll show you how I integrated this with Azure CI/CD pipeline - it's going to be hard as well I promise.