Wow. A Self-hosted and CPU-only AI Instance.

Self-hosted? CPU only? Sign me up! Let's install this very promising app today.

On my personal dev machine, I run LM Studio and Ollama side by side so I can compare them. I also have the best budget choice for running local LLMs, the Nvidia RTX 3080 Ti. But that is my desktop computer, and I only turn it on when I use it. I also run a HomeLab, though. It is a repurposed SFF Intel Core i5 I snagged on Facebook Marketplace for cheap, and I run almost everything on it.

So, when I stumble upon something that says on the label:

🤖 The free, Open Source alternative to OpenAI, Claude and others. Self-hosted and local-first. Drop-in replacement for OpenAI, running on consumer-grade hardware. No GPU required.

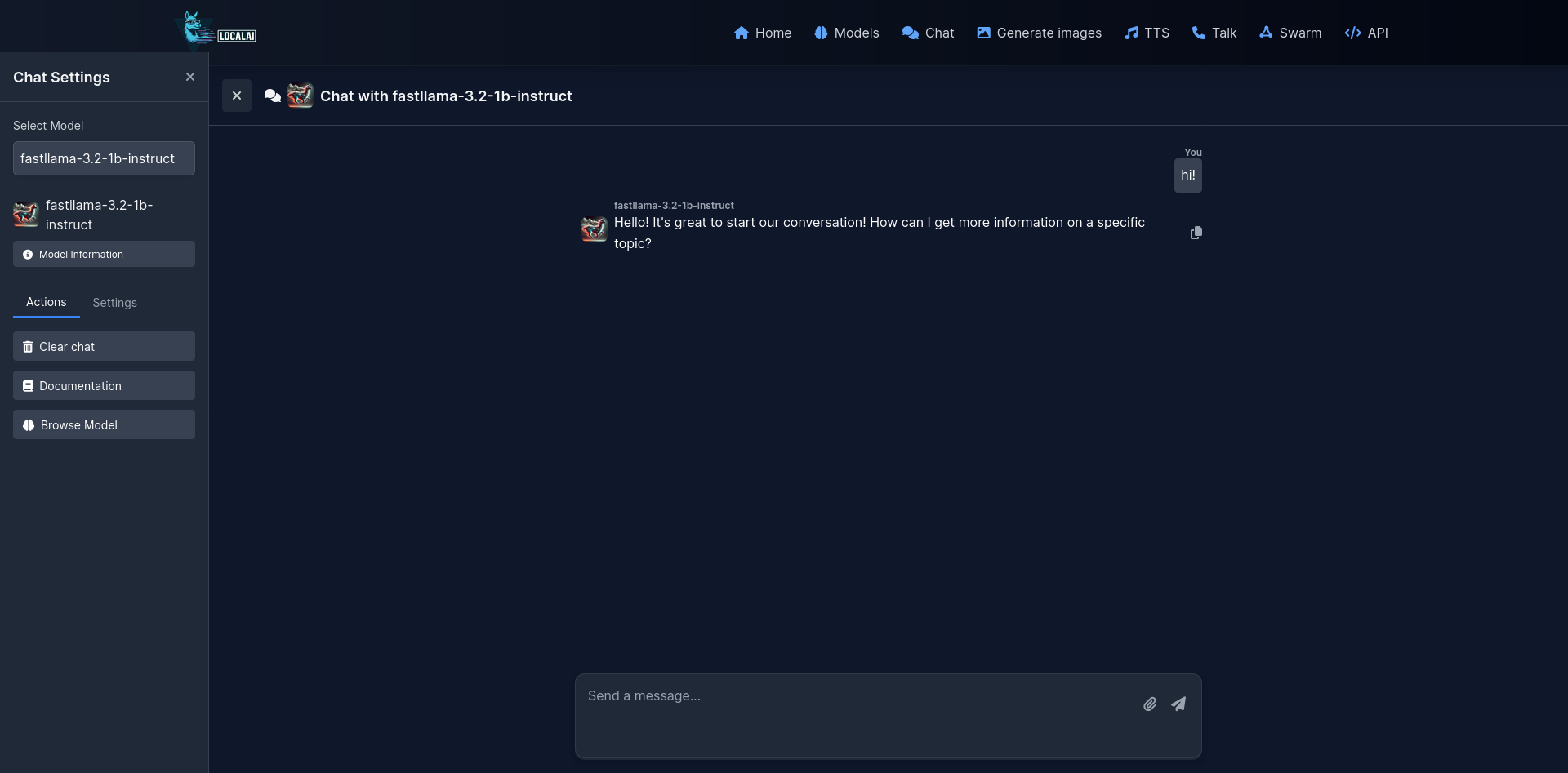

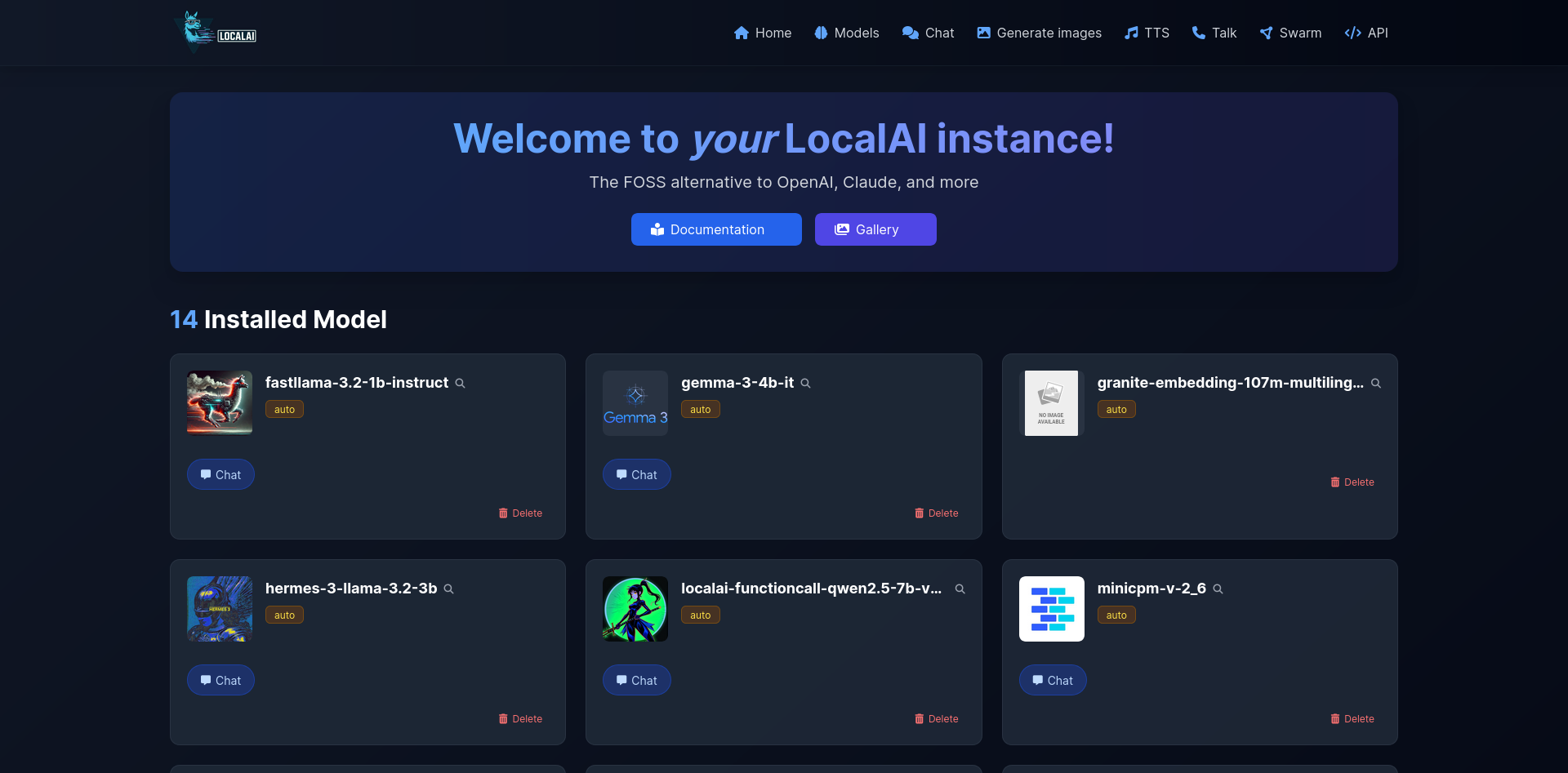

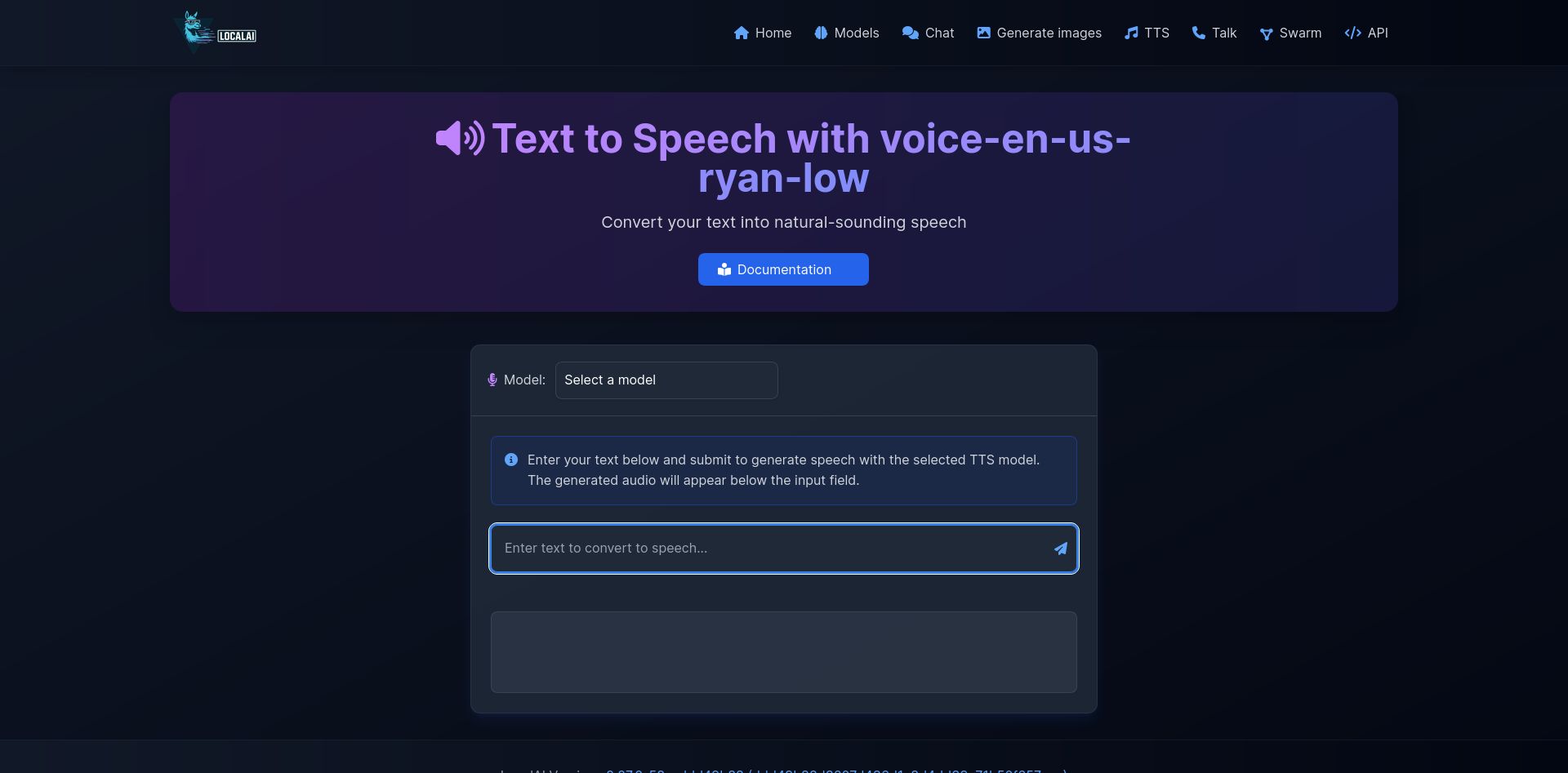

And the design? Work of art.

I immediately salivate. Let's go!

The project is called LocalAI. On the getting started page there is a command to install a CPU-only Docker image. I decided to go with the AIO one. AIO stands for All-in-One, which means some models come bundled with it.

# CPU version

docker run -ti --name local-ai -p 8080:8080 localai/localai:latest-aio-cpu

In my case I only needed to change the host port to 8090 (`-p 8090:8080`), because 8080 is already used by something else in my HomeLab.

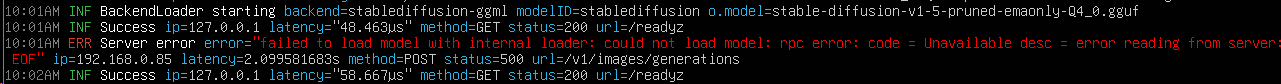

The installation went smoothly, and I could open the web UI immediately. But when I tried to talk to it, nothing happened. So I checked the logs.

docker logs local-ai

Error

Googling this message pointed me to several possible causes, so I stopped searching. Instead, I read the manual again, and somewhere in there it said:

If your CPU doesn't support common instruction sets, you can disable them during build

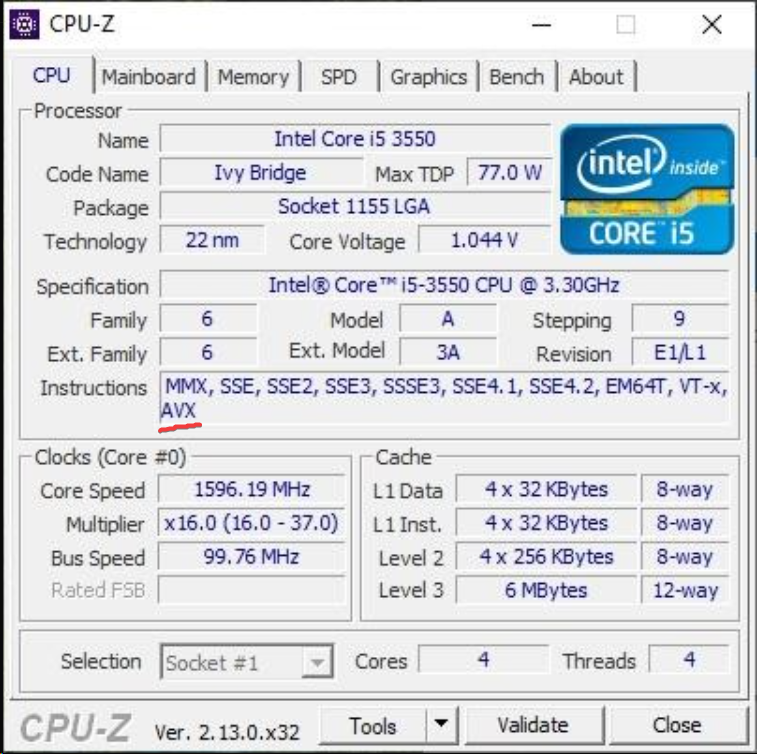

My HomeLab Proxmox host machine is older, so could this be the cause? Let's verify that by trying the suggestion in the manual.

But first, let's do a little bit of reflection.

I know which instruction sets my CPU supports.
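For anyone who wants to check their own box, here is a minimal sketch of one way to do it on x86 Linux. It assumes the kernel lists CPU features on the `flags` line of `/proc/cpuinfo`. The helper name `cpu_simd_flags` is made up for illustration, and this is not necessarily how the check was done for this post.

```shell
# Hypothetical helper: print which of the SIMD-related CPU flags
# (avx, avx2, avx512f, f16c, fma) appear in a cpuinfo-style file.
# $1: path to the file (defaults to /proc/cpuinfo)
cpu_simd_flags() {
    grep -m1 '^flags' "${1:-/proc/cpuinfo}" |  # first "flags" line only
        tr ' ' '\n' |                          # one flag per line
        grep -x -E 'avx|avx2|avx512f|f16c|fma' | # exact matches only
        sort
}
```

Calling `cpu_simd_flags` with no argument reads `/proc/cpuinfo`. Run it wherever Docker actually runs, since a VM or container may not see the same flags as the physical host.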

The manual says to disable the following flags.

CMAKE_ARGS="-DGGML_F16C=OFF -DGGML_AVX512=OFF -DGGML_AVX2=OFF -DGGML_AVX=OFF -DGGML_FMA=OFF" make build

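I can see that my CPU supports AVX (the flag that says `-DGGML_AVX=OFF`), so I left that one out to keep AVX enabled. The rest I typed as is. Since I am running the Docker image instead of building from source, I pass the flags through the `CMAKE_ARGS` environment variable, together with `REBUILD=true`.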
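The rule here is "turn off only what the CPU lacks". A minimal sketch of that rule follows, assuming the five `GGML_*` options from the manual map to the cpuinfo flag names `f16c`, `avx512f`, `avx2`, `avx` and `fma`. The function name `cmake_args_for` is hypothetical, not part of LocalAI.

```shell
# Hypothetical helper: given the flags a CPU supports, print the
# -DGGML_*=OFF options for the instruction sets it does NOT support.
# $1: whitespace-separated list of supported flags, e.g. "avx f16c"
cmake_args_for() {
    supported=$(printf '%s' "$1" | tr '\n\t' '  ')  # normalise separators
    args=""
    for pair in f16c:F16C avx512f:AVX512 avx2:AVX2 avx:AVX fma:FMA; do
        flag=${pair%%:*}   # cpuinfo name, e.g. avx2
        opt=${pair#*:}     # CMake option suffix, e.g. AVX2
        case " $supported " in
            *" $flag "*) ;;                        # supported: leave enabled
            *) args="$args -DGGML_${opt}=OFF" ;;   # unsupported: disable
        esac
    done
    printf '%s\n' "${args# }"
}
```

For a CPU that only has AVX, `cmake_args_for "avx"` prints the same four options used in the command that follows.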

docker run -ti --name local-ai -p 8090:8080 -e DEBUG=true -e THREADS=4 -e REBUILD=true -e CMAKE_ARGS="-DGGML_F16C=OFF -DGGML_AVX512=OFF -DGGML_AVX2=OFF -DGGML_FMA=OFF" localai/localai:latest-aio-cpu

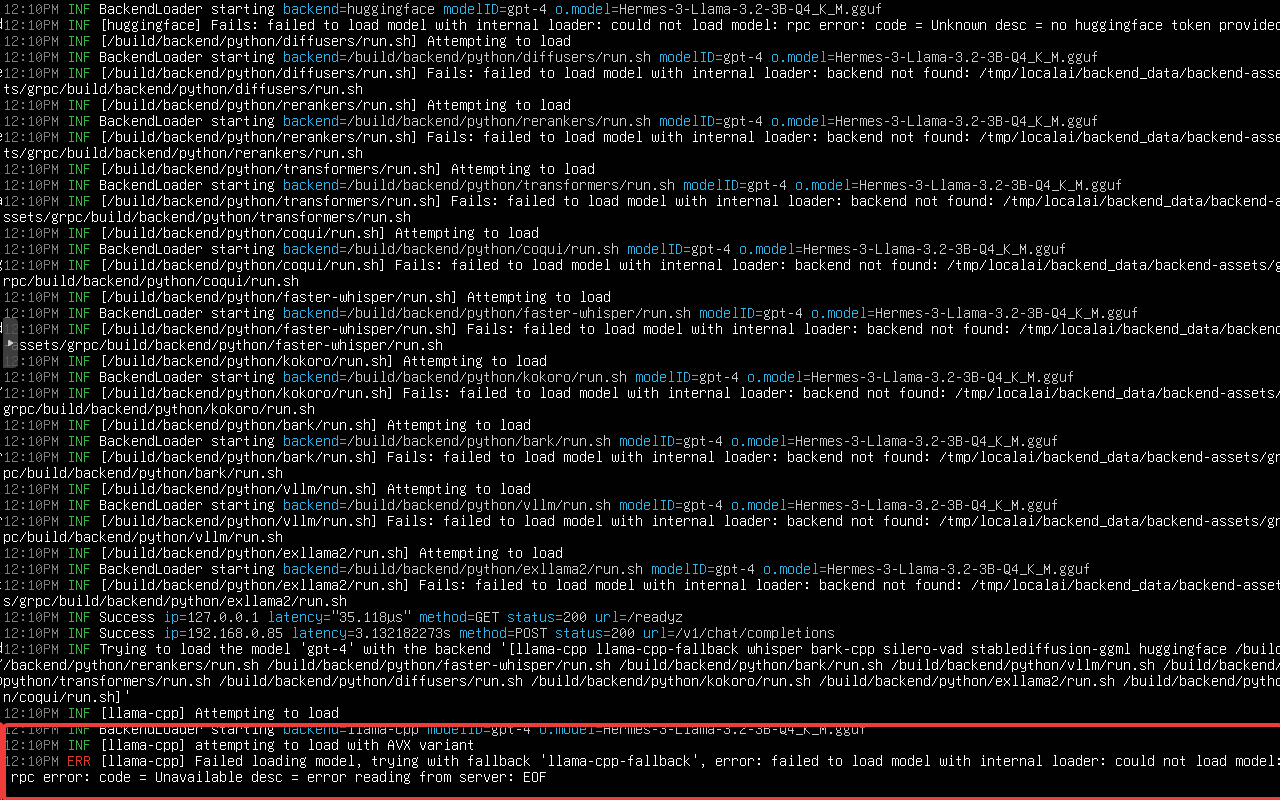

As soon as I ran this, a lot started happening in the logs, because of course it is rebuilding the thing (I don't know which part it's rebuilding exactly, but it's taking a while).

docker logs -f --tail 10 local-ai
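Instead of staring at the logs, the wait could be scripted. This is a rough sketch that polls an HTTP endpoint with `curl` until it answers. The function name `wait_until_ready` is made up, and the `/readyz` path in the usage note is an assumption about LocalAI's health check, so swap in whatever URL your instance actually serves.

```shell
# Hypothetical helper: poll a URL until it returns a successful HTTP status.
# $1: URL to poll, $2: maximum attempts, $3: seconds to sleep between attempts
wait_until_ready() {
    url=$1; tries=$2; delay=$3
    i=0
    while [ "$i" -lt "$tries" ]; do
        # -f: treat HTTP errors as failure, -s: silent, -o: discard the body
        if curl -fs -o /dev/null "$url"; then
            echo "ready after $i retries"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "gave up after $tries attempts" >&2
    return 1
}
```

Something like `wait_until_ready http://localhost:8090/readyz 120 30` would check every 30 seconds for up to an hour. A successful response only means the server answered, not that a model will actually reply.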

I can't tell you how excited I was. I was so sure this was going to work. Why wouldn't it?

I just grabbed a cup of coffee and when I got back:

The same error.

Heavy sigh.

Summary

Perhaps this project promised too much. Perhaps my CPU is really old and not worth supporting. Or perhaps they will fix this issue.

One thing is for sure: I will watch this project closely, and hopefully I'll be able to add it to my HomeLab services.

It would probably run on my desktop machine, but I'm not going to test that because that's not how I want to use this product.

So, future me, I want you to try the above steps again after a while (fingers crossed), @remind me:6mos ;)